What's x264 (H.264/AVC)?

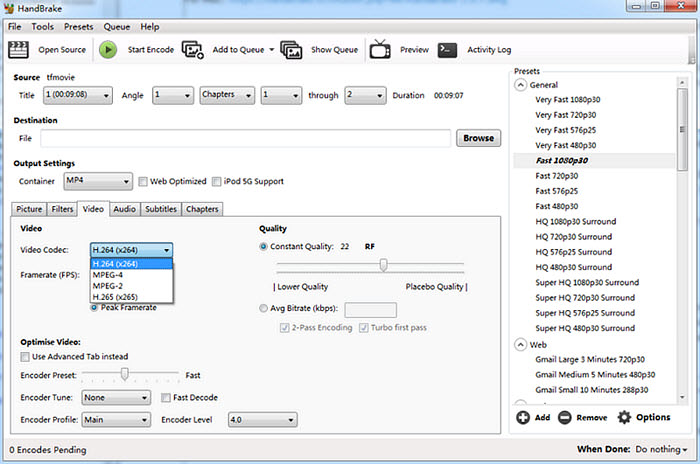

x264 and x265 are the names of the open-source encoders for the two codecs. They are standalone libraries: FFmpeg's libavcodec wraps them as libx264 and libx265, and applications such as HandBrake and VLC also use them for encoding. The short answer is that H.265 (x265) is newer and generally gives you better quality than H.264 (x264) at the same size (bit rate). x264 is one of several software libraries you can use to encode video into H.264, and it claims to be the best H.264 encoder in existence, surpassing even commercially sold encoders. You only need x264 when you are encoding video from another format into H.264; playing H.264 back requires a decoder, not x264.

x264 is a free software library developed by VideoLAN for encoding video streams into the H.264/MPEG-4 AVC format. x264 is often used interchangeably with H.264, which is understandable but not accurate. H.264 (also known as MPEG-4 Part 10 or AVC) is a specification for compressing video, while x264 is a high-quality encoder that produces H.264-compliant video streams. It is the H.264 encoder used by nearly all open-source video tools, such as FFmpeg, GStreamer, and HandBrake. In short, H.264 is a format, and x264 is a software library that creates H.264 files.

What's x265 (H.265/HEVC)?

x265 is a free software library and application for encoding video streams into the H.265/MPEG-H HEVC compression format, released under the terms of the GNU GPL. As the definition suggests, x265 is effectively the successor to x264. The same confusion exists between x265 and H.265 as between x264 and H.264. In everyday usage, however, the distinction is rarely observed, so comparisons of x264 vs. x265 and of H.264 vs. H.265 are usually lumped together.
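
To make the format-versus-encoder distinction concrete: in FFmpeg you pick the encoder library with `-c:v libx264` or `-c:v libx265`, and that choice determines whether the output stream is H.264 or HEVC. The sketch below is a minimal, hypothetical helper (`ffmpeg_encode_cmd` and the file names are illustrative, not part of any tool) that only builds the command line. It assumes an FFmpeg build with both libraries enabled.

```python
import shlex
from typing import List, Optional

# Default CRF (constant rate factor) each library uses when none is given:
# x264 defaults to 23 and x265 to 28. Lower CRF means higher quality.
DEFAULT_CRF = {"libx264": 23, "libx265": 28}


def ffmpeg_encode_cmd(src: str, dst: str, codec: str = "libx265",
                      preset: str = "medium", crf: Optional[int] = None) -> List[str]:
    """Build (but do not run) an FFmpeg command that encodes src with x264 or x265."""
    if codec not in DEFAULT_CRF:
        raise ValueError(f"expected libx264 or libx265, got {codec!r}")
    if crf is None:
        crf = DEFAULT_CRF[codec]
    cmd = ["ffmpeg", "-i", src,
           "-c:v", codec,       # encoder library: libx264 -> H.264, libx265 -> HEVC
           "-preset", preset,   # speed vs. efficiency trade-off
           "-crf", str(crf)]    # constant-quality rate control
    if codec == "libx265" and dst.endswith(".mp4"):
        cmd += ["-tag:v", "hvc1"]  # HEVC-in-MP4 tag expected by Apple players
    cmd += ["-c:a", "copy", dst]   # copy audio through without re-encoding
    return cmd


if __name__ == "__main__":
    # File names are placeholders.
    print(shlex.join(ffmpeg_encode_cmd("input.mkv", "output_h264.mp4", codec="libx264")))
    print(shlex.join(ffmpeg_encode_cmd("input.mkv", "output_hevc.mp4")))
```

Running the printed commands requires `ffmpeg` on your PATH. Keeping the encoder, preset and CRF as explicit arguments also makes it easy to record exactly which settings produced each file.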

X264 Vs X265 Quality

By Tom Vaughan

Whether you want to compare two encoders, or compare different settings for the same encoder, it’s important to understand how to set up and run a valid test. These guidelines are designed to allow anyone to conduct a good test, with useful results. If you publish the results of an encoder comparison and you violate these rules, you shouldn’t be surprised when video professionals point out the flaws in your test design.

- You must use your eyes. Comparing video encoders without visually comparing the video quality is like comparing wines without tasting them. While it’s tempting to use mathematical quality metrics, like peak signal-to-noise ratio (PSNR) or Structural Similarity (SSIM), these metrics don’t accurately measure what you are really trying to judge: subjective visual quality. Only real people can judge whether test sample A looks better than test sample B, or whether two samples are visually identical. Video encoders can be optimized to produce the highest possible PSNR or SSIM scores, but then they won’t produce the highest possible visual quality at a given bit rate. If you publish PSNR and SSIM values but don’t make the encoded video available for others to compare visually, you’re not conducting a valid test at all.

Note: If you’re running a test with x264 or x265 and you wish to publish PSNR or SSIM scores (hopefully in addition to, and not instead of, subjective visual quality tests), you MUST use `--tune psnr` or `--tune ssim`, or your results will be completely invalid. Even with these options, PSNR and SSIM scores are not a good way to compare encoders. x264 and x265 were not optimized to produce the best PSNR and SSIM scores. They include a number of algorithms, such as adaptive quantization and psycho-visual optimizations, that are proven to improve subjective visual quality while at the same time reducing PSNR and SSIM scores. Only subjective visual quality, as judged by real humans, matters.
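
To see why PSNR measures something other than what viewers perceive, here is a minimal sketch of how it is computed for a single 8-bit plane: it is a log-scaled mean squared error and nothing more. Representing a plane as a flat list of sample values is a simplification for illustration; real tools compute it per plane on decoded YUV frames and typically average it over the sequence.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[int], distorted: Sequence[int], max_value: int = 255) -> float:
    """Peak signal-to-noise ratio (dB) between two same-sized 8-bit sample planes."""
    if not reference or len(reference) != len(distorted):
        raise ValueError("planes must be non-empty and the same size")
    # Mean squared error: every pixel error is weighted the same,
    # whether or not a viewer would ever notice it.
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return math.inf  # identical planes
    return 10 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    ref = [100] * 16
    one_big_error = ref[:15] + [116]  # one pixel off by 16
    small_noise = [104] * 16          # every pixel off by 4
    # Both have MSE 16, so both score about 36.1 dB, although they look different.
    print(round(psnr(ref, one_big_error), 2), round(psnr(ref, small_noise), 2))
```

A single glaring error and a uniform, barely visible shift get exactly the same score, which is the kind of blind spot that makes visual inspection necessary.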

Of course, subjective visual quality testing is very time-consuming, but it’s the only valid method for true comparison tests. If, however, you have a very large quantity of video content, and you need to compare the quality of content A against content B, or set up an early warning indicator in an automated quality control system, objective metrics are useful. Netflix has done some valuable work in this area, and we would recommend their VMAF (Video Multimethod Assessment Fusion) metric as the best available today. At best, objective metric scores should be considered only a rough indication of visual quality.

- Video must be evaluated as video, not still frames. It’s relatively easy to compare the visual quality of two decoded frames, but that’s not a valid comparison. Video encoders are designed to encode moving images. Things that are obvious when you are examining a single frame may be completely invisible to any observer when viewed as part of a sequence of images at 24 frames per second or faster. Similarly, you’ll never spot motion inaccuracy or other temporal issues, such as pulsing grain or textures, if you’re only comparing still frames.

- Use only the highest-quality source sequences. A source “sequence” is a video file (a sequence of still pictures) that will serve as the input to your test. It’s important for your source video files to be “camera quality”. You can’t use video that has already been compressed by a camcorder, or video uploaded to a video-sharing site like YouTube or Vimeo, which compresses it to consumer bit rates so that it can be streamed efficiently. If the video was already compressed by another encoder, important high-frequency spatial detail will have been lost, and the motion and position of objects in the video will be inaccurate.

In the early days of digital video, film cameras could capture higher-quality images than video cameras, so the highest-quality source sequences were originally shot with film movie cameras and then scanned one frame at a time. Today, high-quality digital video cameras capture images that rival the best film images. Modern professional digital video cameras can record uncompressed (RAW) or very lightly compressed, high-bit-rate video (Redcode, CinemaDNG, etc.), or transfer uncompressed video via HDMI or SDI to an external recording device (Atomos Shogun, Blackmagic Video Assist) that stores it in a format with very little compression (ProRes HQ, DNxHD, DNxHR). Never use the compressed video from a consumer video camera (GoPro, Nikon, Canon, Sony, Panasonic, etc.): the embedded video encoder chips in consumer video cameras, mobile devices, and DSLRs are not good enough. To test video encoders, you need video that does not already include any compression artifacts.

- Use a variety of source sequences. Your source video should be a representative sample of all of the scenarios you are targeting. This may include different picture sizes (4K, 1080p, 720p, etc.), different frame rates, different content types (fixed camera / talking heads, moving camera, sports/action, animation or computer-generated video), and different levels of complexity (the combination of motion and detail).

- Reproducibility matters. Ideally, you should choose source sequences that are available to others so that they can replicate your test and validate your results. Good collections of test sequences can be found at https://media.xiph.org/video and https://media.xiph.org/video/derf/. If you publish a test that uses your own high-quality source sequences, you should make them available so that others can replicate it.

- Speed matters. Video encoders try many ways to encode each block of video and choose the best one. They can be configured to go faster, but this always comes with a trade-off in encoding efficiency (quality at a given bit rate). Typically, encoders provide presets that let you choose a point along the speed vs. efficiency trade-off curve (x264 and x265 provide ten performance presets, ranging from `--preset ultrafast` to `--preset placebo`). A comparison of two encoders is only valid if they are configured to run at a similar speed (the frames per second they encode) on identical hardware. If encoder A requires a supercomputer to compare favorably with encoder B running on a standard PC or server, or if the two encoders are not tested with similar configurations (fast, medium, or slow/high quality), the result is not valid.

- Eliminate confounding factors. When comparing encoding performance (speed), it’s crucial to eliminate other factors, such as decoding, multiplexing, and disk I/O. Encoders take only uncompressed video frames as input, so decode the high-quality source sequence to raw YUV ahead of time and store it on a fast storage system, such as an SSD or an array of SSDs, so that I/O bandwidth does not become a bottleneck.

- Bit Rate matters. If encoders are run at high bit rates, the quality may be “visually lossless”. In other words, an average person will not be able to see any quality degradation between the source video and the encoded test video. Of course, it isn’t possible to determine which encoder or which settings are best if both test samples are visually lossless, and therefore visually identical. The bit rates (or quality levels, for constant quality encoding) you choose for your tests should be in a reasonable range. This will vary with the complexity of the content, but for HEVC encoder testing with typical 1080p30 content, you should test at bit rates ranging roughly from 400 kbps to 3 Mbps, and for 4K30 you should cover a range of roughly 500 kbps to 15 Mbps. It will be easiest to see the differences at low bit rates, but a valid test will cover the full range of quality levels applicable to the conditions you expect the encoder to be used for.

- Rate Control matters. Depending on how the video will be delivered, and on the devices that will decode and display it, the bit rate may need to be carefully controlled to avoid problems. For example, a satellite transmission channel has a fixed bandwidth; if the video bit rate exceeds it, the full video stream cannot be transmitted through the channel, and the video will be corrupted in some way. Similarly, most video is decoded by hardware video decoders built into the device (TV, PC, mobile device, etc.), and these decoders have a fixed amount of memory to hold the incoming compressed video stream and the decoded frames as they are reordered for display. Encoding a video file to an overall average target bit rate is relatively easy. For professional applications, it is critical to keep the bit rate within limits throughout the video, so that it never overfills a transmission channel or overflows a decoder’s memory buffer.

- Encoder Settings matter. A good video encoder like x265 has many, many settings. We have done many experiments to determine the optimal combinations of settings that trade off encoder speed for encoding efficiency, and x265’s 10 performance presets make it easy to run valid encoder comparison tests. If you are comparing x265 with another encoder and believe you need to modify the default settings, contact us to discuss your test parameters, and we’ll give you the guidance you need.

- Show your work. Before you believe any published test or claim (especially from one of our competitors), ask for all of the information and materials needed to reproduce those results. It’s easy to make unsubstantiated claims, and it’s easy for companies to run hundreds of tests and cherry-pick the ones that show their product in the most favorable light. Unless you are given access to the source video, the encoded bitstreams, the settings, and the system configuration, and you can reproduce the results independently with your own test video sequences under conditions that meet your requirements, don’t believe everything you read.

- Speak for yourself. Don’t claim to be an expert in the design and operation of a particular video encoder if you are not. Recognize that your experience with each encoder is limited to the types of video you work with, while encoders are generally designed to cover a very wide range of uses, from the highest-quality archiving of 8K masters or medical images to extremely low-bit-rate transmission of video over wireless connections. If you want to know what an encoder can or can’t do, or how to optimize it for a particular scenario, you should ask the developers of that encoder.
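
The “Speed matters” guideline above comes down to simple arithmetic: measure each encoder’s throughput on the same hardware and only compare runs whose frame rates are close. This is a minimal sketch; the helper names, the 10% tolerance and the example timings are hypothetical choices, not thresholds recommended in this article, while the preset names are the ten x264/x265 presets it mentions.

```python
from typing import Tuple

# The ten x264/x265 presets, fastest to slowest.
X26X_PRESETS: Tuple[str, ...] = (
    "ultrafast", "superfast", "veryfast", "faster", "fast",
    "medium", "slow", "slower", "veryslow", "placebo",
)


def encode_fps(frames: int, seconds: float) -> float:
    """Encoding throughput in frames per second."""
    if seconds <= 0:
        raise ValueError("elapsed time must be positive")
    return frames / seconds


def speeds_comparable(fps_a: float, fps_b: float, tolerance: float = 0.10) -> bool:
    """True if the slower run is within `tolerance` (a fraction) of the faster one."""
    slower, faster = sorted((fps_a, fps_b))
    return slower >= faster * (1 - tolerance)


if __name__ == "__main__":
    # Hypothetical timings of a 600-frame clip on the same machine.
    a = encode_fps(600, 20.0)   # 30 fps
    b = encode_fps(600, 21.0)   # ~28.6 fps
    c = encode_fps(600, 60.0)   # 10 fps
    print(speeds_comparable(a, b), speeds_comparable(a, c))
```

In practice you would adjust each encoder’s preset until the speeds line up, and only then compare quality at matched bit rates.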
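
For the “Eliminate confounding factors” guideline, the arithmetic below estimates how fast the storage holding the raw YUV needs to be. Decoding the source can be done with, for example, `ffmpeg -i source.mov -pix_fmt yuv420p -f rawvideo source.yuv` (file names are placeholders). The sketch assumes planar YUV with samples deeper than 8 bits stored in 16-bit words, as in formats such as yuv420p10le; the helper names are hypothetical.

```python
from typing import Dict, Tuple

# (horizontal, vertical) chroma subsampling factors for planar YUV formats.
CHROMA_SUBSAMPLING: Dict[str, Tuple[int, int]] = {"420": (2, 2), "422": (2, 1), "444": (1, 1)}


def raw_frame_bytes(width: int, height: int, bit_depth: int = 8, chroma: str = "420") -> int:
    """Size in bytes of one uncompressed planar YUV frame."""
    sub_w, sub_h = CHROMA_SUBSAMPLING[chroma]
    luma = width * height
    chroma_plane = -(-width // sub_w) * -(-height // sub_h)  # ceiling division per axis
    bytes_per_sample = 1 if bit_depth <= 8 else 2  # >8-bit samples use 16-bit storage
    return (luma + 2 * chroma_plane) * bytes_per_sample


def required_read_rate(width: int, height: int, encode_fps: float,
                       bit_depth: int = 8, chroma: str = "420") -> float:
    """Bytes per second the storage must sustain to keep an encoder fed at encode_fps."""
    return raw_frame_bytes(width, height, bit_depth, chroma) * encode_fps


if __name__ == "__main__":
    print(required_read_rate(1920, 1080, 30) / 1e6, "MB/s for 8-bit 4:2:0 1080p at 30 fps")
    print(required_read_rate(3840, 2160, 60, bit_depth=10) / 1e9, "GB/s for 10-bit 4:2:0 4K at 60 fps")
```

Note that the rate scales with the encoder’s speed, not the clip’s nominal frame rate: a fast preset that encodes faster than real time reads proportionally more data per second.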
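
To cover the bit-rate ranges given in the “Bit Rate matters” guideline without bunching test points at the high end, one option is to space them geometrically, so that consecutive points differ by the same ratio. That spacing is an assumption of this sketch, chosen because rate-distortion comparisons such as the Bjøntegaard delta rate work on a logarithmic bit-rate scale; the helper name and the number of steps are hypothetical.

```python
from typing import List


def bitrate_ladder(low_kbps: float, high_kbps: float, steps: int) -> List[int]:
    """`steps` bit rates from low to high (inclusive), spaced by a constant ratio."""
    if steps < 2 or not 0 < low_kbps < high_kbps:
        raise ValueError("need steps >= 2 and 0 < low_kbps < high_kbps")
    ratio = (high_kbps / low_kbps) ** (1 / (steps - 1))
    return [round(low_kbps * ratio ** i) for i in range(steps)]


if __name__ == "__main__":
    # Ranges quoted in the guideline; five points per range is an arbitrary choice.
    print("1080p30:", bitrate_ladder(400, 3000, 5))
    print("4K30:   ", bitrate_ladder(500, 15000, 5))
```

Each resulting bit rate would then be encoded once per encoder and preset, giving one rate-distortion curve per configuration.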
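
The “Rate Control matters” guideline describes the decoder’s input buffer. x264 and x265 constrain it with `--vbv-maxrate` (kbit/s) and `--vbv-bufsize` (kbit), with `--vbv-init` setting the initial buffer fullness. The sketch below is a simplified leaky-bucket check, not the exact HRD model from the H.264/HEVC specifications or the encoders’ own implementation: the buffer fills at the maximum rate until it is full, each frame’s bits are removed at once when it is decoded, and a frame larger than the buffer’s current contents counts as an underflow. The function name and the 0.9 initial fullness are assumptions of this sketch.

```python
from typing import List, Sequence


def vbv_underflows(frame_bits: Sequence[int], maxrate_kbps: float,
                   bufsize_kbits: float, fps: float, init_fullness: float = 0.9) -> List[int]:
    """Indices of frames that arrive too late for a simplified leaky-bucket decoder buffer."""
    bufsize = bufsize_kbits * 1000.0
    fill_per_frame = maxrate_kbps * 1000.0 / fps  # bits delivered per frame interval
    fullness = bufsize * init_fullness             # bits buffered before decoding starts
    late = []
    for i, bits in enumerate(frame_bits):
        if bits > fullness:
            late.append(i)   # frame not fully received at decode time: underflow
            fullness = 0.0   # simplification: decoder stalls until the frame arrives
        else:
            fullness -= bits
        # Refill until the next decode time; delivery pauses while the buffer is full.
        fullness = min(bufsize, fullness + fill_per_frame)
    return late


if __name__ == "__main__":
    # 3 Mbit/s maximum rate, 6 Mbit buffer, 30 fps (hypothetical settings).
    steady = [100_000] * 300   # exactly 3 Mbit/s on average
    burst = [1_000_000] * 6    # a short 30 Mbit/s burst
    print(vbv_underflows(steady, 3000, 6000, 30), vbv_underflows(burst, 3000, 6000, 30))
```

The steady stream never underflows, while the burst drains the buffer after a few frames. An encoder with VBV enabled prevents this during encoding by lowering the quality of frames that would otherwise exceed the buffer.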

Hardware vs. Software encoders.

It is a bit silly to compare hardware encoders to software encoders. While it’s interesting to know how a hardware encoder compares to a software encoder at a given point in time on a given hardware configuration, there are vast differences between the two types, and each has distinct advantages and disadvantages. Hardware encoders are not cross-platform; they are either built into or added on to the platform. Hardware encoders are typically designed to run in real time, with lower power consumption than software encoders, but for the highest-quality video encoding, hardware encoders can NEVER beat software encoders, because their algorithms are fixed (designed into the hardware), while software encoders are infinitely flexible, configurable and upgradeable. There are many situations where only a hardware encoder makes sense, such as in a video camera or cell phone. There are also many situations where only a software encoder makes sense, such as high-quality video encoding in the cloud on virtual machines.